In today’s interconnected world, the digital landscape is constantly evolving. With the proliferation of devices, applications, and networks, the attack surface available to adversaries has grown dramatically. From malware and ransomware to sophisticated state-sponsored campaigns, the threats are diverse and constantly changing. As these threats evolve, so too must our defense mechanisms. This is where neural networks and deep learning come in, offering a fundamentally different, data-driven approach to cybersecurity.

The Digital Battlefield

The modern digital realm can be likened to a battlefield. On one side, we have businesses, governments, and individuals striving to protect their data and maintain the integrity of their systems. On the other side, cybercriminals, hackers, and even rogue states are constantly devising new methods to breach defenses and achieve their malicious objectives. The stakes are high, with potential consequences ranging from financial losses and reputational damage to national security threats.

Neural Networks: The New Guardians

Neural networks, inspired by the human brain’s interconnected neuron structures, represent a paradigm shift in how we approach cybersecurity. Traditional defense mechanisms, such as firewalls and signature-based antivirus solutions, rely on predefined rules and known threat signatures. While effective against known threats, they often falter when faced with new, unknown attack vectors.

In contrast, neural networks, especially deep learning models, learn their behavior from data rather than from hand-written rules. They can be trained to recognize patterns, flag anomalies, and, given relevant historical data, estimate the likelihood of potential threats. Because they can be retrained as new incidents are observed, they are particularly well suited to the dynamic world of cybersecurity.

| Aspect | Traditional Methods | Neural Network-based Methods |
|---|---|---|
| Learning Ability | Static (Rule-based) | Dynamic (Adaptive) |
| Threat Recognition | Known Signatures | Pattern and Anomaly Detection |
| Adaptability | Limited | High (Continuous Learning) |
| Response Time | Varies | Rapid (Real-time Analysis) |

The Promise of a Safer Future

As cyber threats grow in complexity, neural networks hold considerable promise for cybersecurity. By leveraging machine learning and artificial intelligence, defenders can move beyond purely reacting to known threats toward anticipating and mitigating new ones. The fusion of technology and human intelligence offers a promising path forward in the ever-challenging realm of cybersecurity.

The Evolution of Neural Networks in Cybersecurity

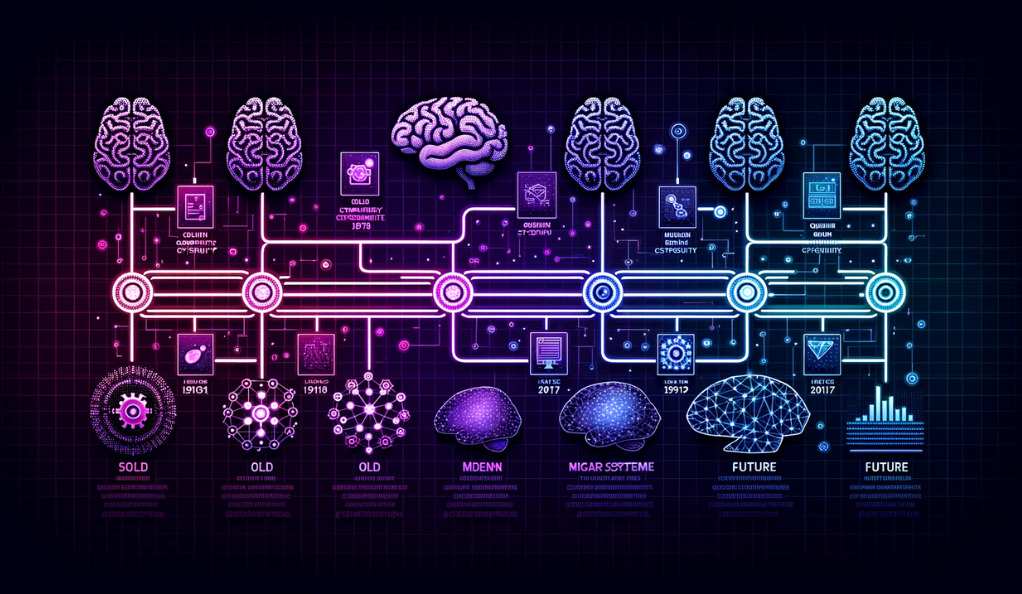

From Perceptrons to Deep Neural Networks

The journey of neural networks in cybersecurity mirrors their evolution in the broader field of artificial intelligence. One of the earliest trainable neural networks, the perceptron, was introduced by Frank Rosenblatt in the late 1950s. A single perceptron is a linear binary classifier: it can only separate classes that a straight line (or hyperplane) can divide, so it cannot learn even a simple function such as XOR. These limitations meant perceptrons were soon overshadowed by other AI techniques.
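A minimal sketch of the perceptron learning rule in plain Python makes this limitation concrete. The toy AND and XOR truth tables are chosen purely for illustration: the rule converges on AND, which is linearly separable, but no choice of weights can classify all four XOR cases correctly.

```python
def predict(w, b, x):
    """Perceptron output: 1 if the weighted sum is above zero, else 0."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0

def train_perceptron(data, lr=1.0, epochs=20):
    """Rosenblatt-style rule: nudge the weights toward each misclassified example."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        errors = 0
        for x, y in data:
            error = y - predict(w, b, x)  # -1, 0, or +1
            if error:
                w = [wi + lr * error * xi for wi, xi in zip(w, x)]
                b += lr * error
                errors += 1
        if errors == 0:  # converged: every example classified correctly
            break
    return w, b

def accuracy(w, b, data):
    return sum(predict(w, b, x) == y for x, y in data) / len(data)

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

print(accuracy(*train_perceptron(AND), AND))  # 1.0: AND is linearly separable
print(accuracy(*train_perceptron(XOR), XOR))  # below 1.0: no line separates XOR
```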

The resurgence of neural networks began in the 1980s and 1990s, when the backpropagation algorithm was popularized for training multi-layer networks. Backpropagation applies the chain rule to compute how much each weight contributed to the error of a prediction, so that every weight can be adjusted by gradient descent. This marked the beginning of a new era, in which neural networks could be trained effectively on complex datasets.
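To make the idea concrete, here is a minimal sketch of backpropagation for a tiny network (two inputs, two sigmoid hidden units, one sigmoid output), checked against a finite-difference estimate of the same gradient. The architecture, the made-up weights, and the squared-error loss are illustrative assumptions; modern frameworks compute these gradients automatically.

```python
import copy
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(params, x):
    """2 inputs -> 2 sigmoid hidden units -> 1 sigmoid output."""
    W1, b1, w2, b2 = params
    h = [sigmoid(W1[j][0] * x[0] + W1[j][1] * x[1] + b1[j]) for j in range(2)]
    y_hat = sigmoid(w2[0] * h[0] + w2[1] * h[1] + b2)
    return h, y_hat

def loss(params, x, y):
    """Squared error for a single example."""
    _, y_hat = forward(params, x)
    return 0.5 * (y_hat - y) ** 2

def backprop(params, x, y):
    """Gradients for every parameter, in the same nested layout as `params`."""
    W1, b1, w2, b2 = params
    h, y_hat = forward(params, x)
    delta_out = (y_hat - y) * y_hat * (1 - y_hat)  # dL/d(output pre-activation)
    # Chain rule: send the output error back through w2 and each hidden sigmoid.
    delta_hid = [delta_out * w2[j] * h[j] * (1 - h[j]) for j in range(2)]
    grad_W1 = [[delta_hid[j] * x[i] for i in range(2)] for j in range(2)]
    grad_w2 = [delta_out * h[j] for j in range(2)]
    return [grad_W1, delta_hid, grad_w2, delta_out]

def numeric_grad(params, x, y, path, eps=1e-6):
    """Central finite difference for the single parameter addressed by `path`."""
    def loss_shifted(delta):
        p = copy.deepcopy(params)
        container = p
        for key in path[:-1]:
            container = container[key]
        container[path[-1]] += delta
        return loss(p, x, y)
    return (loss_shifted(eps) - loss_shifted(-eps)) / (2 * eps)

params = [[[0.1, -0.2], [0.4, 0.3]], [0.0, 0.1], [0.5, -0.6], 0.05]
x, y = [1.0, 0.0], 1.0
grads = backprop(params, x, y)
# The two numbers should agree to many decimal places.
print(grads[2][0], numeric_grad(params, x, y, (2, 0)))
```

Agreement between the two estimates is a standard sanity check that the chain-rule bookkeeping is correct.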

The real game-changer came with the rise of deep learning in the late 2000s and early 2010s, driven by larger datasets, GPU computing, and improved training techniques. Deep neural networks, with many stacked layers, could process vast amounts of data, recognize intricate patterns, and make highly accurate predictions. Their success in image and speech recognition set the stage for their foray into cybersecurity.

Neural Networks Meet Cybersecurity

As cyber threats became more sophisticated, the limitations of traditional defense mechanisms became evident. Static, signature-based systems were ill-equipped to handle zero-day attacks, for which no signature yet exists, and advanced persistent threats. The cybersecurity community began to explore alternative solutions, and neural networks emerged as a promising candidate.

Early applications of neural networks in cybersecurity focused on intrusion detection systems (IDS). These systems were trained on network traffic data, learning to differentiate between normal and malicious activities. Over time, as the technology matured, neural networks found applications in various other areas of cybersecurity, including malware detection, phishing email identification, and even threat prediction.

| Time Period | Neural Network Type | Application in Cybersecurity |
|---|---|---|
| 1950s | Perceptrons | None directly (predates the field); basic linear classification only |
| 1980s-1990s | Multi-layer Perceptrons | Intrusion Detection (Early Stages) |
| 2000s-Present | Deep Neural Networks | Advanced IDS, Malware Detection, Phishing Identification, Threat Prediction |

Challenges and Triumphs

While the integration of neural networks into cybersecurity brought numerous advantages, it wasn’t without challenges. Training deep neural networks required vast amounts of data, powerful computational resources, and significant time. Additionally, ensuring that these models were robust against adversarial attacks became a priority.

However, the benefits have generally outweighed the challenges. Because neural networks can be retrained as new data arrives, they remain useful as cyber threats evolve. Their predictive capabilities also help organizations shift from a purely reactive stance toward a more proactive one, spotting suspicious activity earlier in an attack.

Challenges in Implementing Neural Networks for Intrusion Detection

The Intricacies of Hyperparameter Tuning

One of the primary challenges in deploying neural networks for intrusion detection is hyperparameter tuning. Hyperparameters, unlike model parameters, are not learned during training but are set beforehand. These include learning rates, batch sizes, and the number of layers or neurons in the network. Selecting the right combination can significantly impact the model’s performance. Too many neurons might lead to overfitting, where the model performs exceptionally well on training data but poorly on unseen data. Conversely, too few neurons might result in underfitting, where the model fails to capture the underlying patterns in the data.
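Grid search is one simple, if expensive, way to tune these hyperparameters: train once per combination and keep the setting with the best score on held-out validation data. In the sketch below, `train_and_score` and `toy_score` are hypothetical stand-ins; in practice the scorer would train a real intrusion-detection model and evaluate it on held-out traffic.

```python
import itertools
import math

def grid_search(train_and_score, grid):
    """Evaluate every combination in `grid` and return (best_config, best_score).

    `train_and_score` is a hypothetical callable: it trains a model with the given
    hyperparameters and returns a validation score (higher is better).
    """
    names = sorted(grid)
    best_config, best_score = None, float("-inf")
    for values in itertools.product(*(grid[name] for name in names)):
        config = dict(zip(names, values))
        score = train_and_score(**config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score

grid = {
    "learning_rate": [1e-3, 1e-2, 1e-1],
    "batch_size": [32, 128],
    "hidden_units": [16, 64, 256],
}

def toy_score(learning_rate, batch_size, hidden_units):
    """Made-up scorer that peaks at lr=1e-2 and 64 hidden units, for demonstration only."""
    return (-abs(math.log10(learning_rate) + 2)
            - abs(hidden_units - 64) / 64
            - batch_size / 1000)

best, score = grid_search(toy_score, grid)
print(best)  # {'batch_size': 32, 'hidden_units': 64, 'learning_rate': 0.01}
```

The grid grows multiplicatively (here 3 × 2 × 3 = 18 training runs), which is why random search or other sample-efficient strategies are often preferred when there are many hyperparameters.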

Balancing the Dataset: The Class Imbalance Problem

Intrusion detection datasets often suffer from class imbalance. This means that the number of benign (non-malicious) samples significantly outweighs the number of malicious samples. Training a neural network on such a dataset can lead the model to become biased towards predicting the majority class, resulting in a high number of false negatives. Techniques like oversampling, undersampling, and synthetic data generation are employed to address this issue, but they come with their own set of challenges.
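A minimal sketch of the problem and two common remedies, using a hypothetical split of 990 benign and 10 malicious flows. A model that always predicts "benign" scores 99% accuracy while catching no attacks at all. Class weights (the `n_samples / (n_classes * class_count)` formula, the same one scikit-learn uses for `class_weight="balanced"`) make rare classes count more in the loss, while random oversampling duplicates minority examples until the classes are even.

```python
import random
from collections import Counter

def balanced_class_weights(labels):
    """Per-class weight n_samples / (n_classes * class_count): rare classes count more."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * count) for cls, count in counts.items()}

def random_oversample(samples, labels, seed=0):
    """Duplicate random examples of each smaller class until all classes match the largest."""
    rng = random.Random(seed)
    by_class = {}
    for x, y in zip(samples, labels):
        by_class.setdefault(y, []).append(x)
    target = max(len(xs) for xs in by_class.values())
    out_x, out_y = [], []
    for y, xs in by_class.items():
        resampled = xs + [rng.choice(xs) for _ in range(target - len(xs))]
        out_x.extend(resampled)
        out_y.extend([y] * len(resampled))
    return out_x, out_y

# Hypothetical training split: 990 benign (0) flows and 10 malicious (1) flows.
labels = [0] * 990 + [1] * 10
samples = list(range(len(labels)))  # placeholder feature vectors

# A "model" that always predicts benign looks accurate but misses every attack.
always_benign = [0] * len(labels)
accuracy = sum(p == y for p, y in zip(always_benign, labels)) / len(labels)
recall = sum(p == y == 1 for p, y in zip(always_benign, labels)) / labels.count(1)
print(accuracy, recall)  # 0.99 0.0

print(balanced_class_weights(labels))  # malicious weighted 50.0, benign about 0.505
X_bal, y_bal = random_oversample(samples, labels)
print(Counter(y_bal))  # Counter({0: 990, 1: 990})
```

Oversampling should be applied only to the training split; duplicating minority examples before splitting leaks copies into the validation set, and heavy duplication can encourage the model to memorize those few attacks.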

Securing Neural Networks from Adversarial Attacks

While neural networks are being used to detect and prevent cyber threats, they themselves can become targets. Adversarial attacks involve feeding maliciously crafted input to the neural network, causing it to make incorrect predictions or classifications. These inputs are designed to be nearly indistinguishable from regular inputs, making them particularly hard to detect. Ensuring the robustness of neural networks against such attacks is crucial, especially when they are on the front line of cybersecurity defenses.

Navigating Non-Technical Challenges

Beyond the technical hurdles, implementing neural networks in intrusion detection systems presents societal, ethical, and legal challenges. For instance, the “black box” nature of deep learning models can make it difficult to explain their decisions, leading to trust issues. Moreover, as these systems monitor network traffic, concerns about user privacy and data protection come to the forefront. Balancing the need for security with respecting individual rights becomes a delicate act.

The Power of Deep Learning in Intrusion Detection

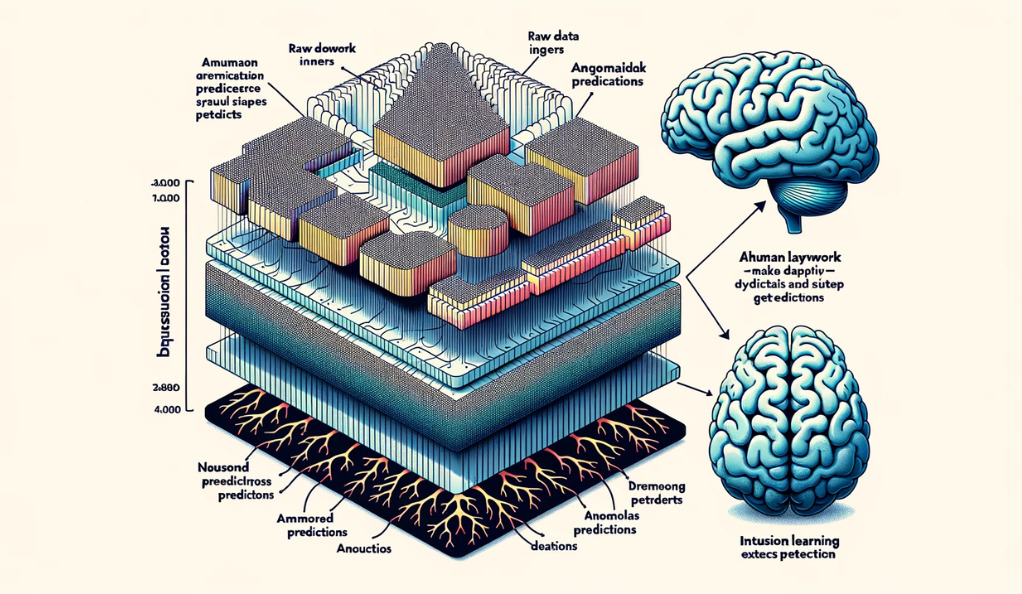

Deep Learning: Beyond Traditional Neural Networks

Deep learning, a subset of machine learning, uses neural networks with multiple hidden layers (a common rule of thumb is three or more layers in total). These networks are loosely inspired by the structure of the human brain and “learn” their parameters from large amounts of data. While a network with a single layer can make only relatively simple, approximate predictions, additional hidden layers let it build up more complex representations that refine those predictions.
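A minimal sketch of a deep network’s forward pass in plain Python shows what additional hidden layers mean in practice: each layer transforms the previous layer’s output, and only the final layer produces the prediction. The layer sizes, the ReLU and sigmoid choices, and the eight flow features are assumptions for illustration, and the weights are random rather than trained.

```python
import math
import random

def dense(x, weights, bias):
    """Fully connected layer: one row of weights per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def relu(v):
    return [max(0.0, z) for z in v]

def sigmoid(z):
    # Numerically stable form for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def init_network(layer_sizes, seed=0):
    """Random weights for each consecutive pair of layer sizes, e.g. [8, 32, 16, 1]."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        scale = math.sqrt(2.0 / n_in)  # He-style scaling, suited to ReLU layers
        weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
        layers.append((weights, [0.0] * n_out))
    return layers

def forward(x, layers):
    """Hidden layers use ReLU; the final layer outputs P(malicious) via a sigmoid."""
    for weights, bias in layers[:-1]:
        x = relu(dense(x, weights, bias))
    weights, bias = layers[-1]
    return sigmoid(dense(x, weights, bias)[0])

# Hypothetical input: 8 normalized features describing one network flow.
flow = [0.2, 0.0, 0.7, 0.1, 0.5, 0.3, 0.9, 0.4]
net = init_network([8, 32, 16, 1])  # two hidden layers
p = forward(flow, net)
print(p)  # untrained, so this probability is meaningless until the weights are learned
```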

In the context of intrusion detection, deep learning can sift through vast amounts of network traffic data, identifying patterns and anomalies that might be indicative of a cyber threat. The depth of these networks allows for the extraction of intricate features from raw data, which might be overlooked by shallow networks or human analysts.

Enhanced Detection Capabilities

The primary advantage of using deep learning in intrusion detection is its enhanced detection capability. Traditional intrusion detection systems rely on predefined signatures of known threats. In contrast, deep learning models can potentially flag zero-day exploits and sophisticated attack vectors, for which no signature exists yet, by recognizing deviations from learned patterns of normal behavior. This proactive approach can potentially stop cyber threats before they inflict damage.
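The core idea, learning what normal traffic looks like and flagging departures from it, can be sketched without a deep network. The example below deliberately swaps in a simple per-feature z-score baseline as a stand-in; a deep detector such as an autoencoder would replace `anomaly_score` with a learned measure like reconstruction error, but the fit-on-benign, score, and threshold steps stay the same. The features, data, and threshold of 4.0 are illustrative assumptions.

```python
import statistics

def fit_baseline(normal_rows):
    """Per-feature (mean, std) learned only from traffic assumed to be benign."""
    baseline = []
    for column in zip(*normal_rows):
        sd = statistics.pstdev(column)
        baseline.append((statistics.fmean(column), sd if sd > 0 else 1.0))  # avoid division by zero
    return baseline

def anomaly_score(row, baseline):
    """Largest absolute z-score across features: how far the row is from 'normal'."""
    return max(abs(x - mu) / sd for x, (mu, sd) in zip(row, baseline))

def is_anomalous(row, baseline, threshold=4.0):
    return anomaly_score(row, baseline) > threshold

# Hypothetical features per flow: [packets per second, mean packet size in bytes]
normal = [[100, 500], [110, 520], [95, 480], [105, 510], [90, 490]]
baseline = fit_baseline(normal)

print(is_anomalous([102, 505], baseline))  # False: close to normal traffic
print(is_anomalous([1000, 60], baseline))  # True: a flood of tiny packets unlike the baseline
```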

Real-time Analysis and Response

Deep learning models, once trained, can analyze large volumes of data in real time. This rapid analysis is crucial for intrusion detection, where timely detection and response can mean the difference between a minor security incident and a major breach. By continuously monitoring network traffic and making real-time predictions, deep learning models can trigger immediate alerts or countermeasures upon detecting malicious activity.
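A minimal sketch of such a monitoring loop: events are scored in small batches as they arrive, and anything at or above a threshold is surfaced as an alert. `score_batch` is a hypothetical stand-in for a trained model, and the threshold, batch size, and event fields are assumptions. Larger batches improve throughput but add latency, so a real deployment would likely also flush partial batches on a timer.

```python
from typing import Callable, Dict, Iterable, Iterator, List, Sequence, Tuple

Event = Dict[str, object]

def stream_alerts(
    events: Iterable[Event],
    score_batch: Callable[[Sequence[Event]], List[float]],
    threshold: float,
    batch_size: int = 64,
) -> Iterator[Tuple[Event, float]]:
    """Score events in small batches as they arrive; yield (event, score) for suspicious ones."""
    buffer: List[Event] = []
    for event in events:
        buffer.append(event)
        if len(buffer) == batch_size:
            yield from _score_and_filter(buffer, score_batch, threshold)
            buffer = []
    if buffer:  # flush the final partial batch
        yield from _score_and_filter(buffer, score_batch, threshold)

def _score_and_filter(batch, score_batch, threshold):
    for event, score in zip(batch, score_batch(batch)):
        if score >= threshold:
            yield event, score

# Hypothetical scorer standing in for a trained model: more bytes means more suspicious.
def toy_score_batch(batch):
    return [min(1.0, e["bytes"] / 1_000_000) for e in batch]

events = [
    {"src": "10.0.0.1", "bytes": 1_000},
    {"src": "10.0.0.2", "bytes": 2_000_000},
    {"src": "10.0.0.3", "bytes": 500_000},
]
for event, score in stream_alerts(events, toy_score_batch, threshold=0.9, batch_size=2):
    print("ALERT", event["src"], score)  # ALERT 10.0.0.2 1.0
```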

Limitations and Considerations

While deep learning offers significant advantages, it’s not without limitations. Training deep learning models requires substantial computational power and large labeled datasets. There’s also the risk of overfitting, where the model becomes too tailored to the training data and performs poorly on new, unseen data.

Moreover, the complexity of deep learning models can make them difficult to interpret. This lack of transparency, often referred to as the “black box” problem, can pose challenges in scenarios where understanding the rationale behind decisions is crucial.

Adversarial Attacks and Neural Network Security

Understanding Adversarial Attacks

In the realm of deep learning, adversarial attacks present a unique and formidable challenge. These attacks involve inputting specially crafted data into a neural network, causing it to make an incorrect prediction or classification. The perturbations made to the data are often so subtle that they’re imperceptible to the human eye, yet they can drastically alter the network’s output.

For instance, in the context of image recognition, an adversarial attack might involve adding a slight, almost invisible noise to an image of a cat, causing a neural network to misclassify it as a dog. In cybersecurity, such attacks can have more dire consequences, such as bypassing intrusion detection systems.

Why Neural Networks are Vulnerable

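The susceptibility of neural networks to adversarial attacks is rooted in their design. These models are trained to minimize error on their training data, not to behave sensibly on every possible input, so their decision boundaries can lie surprisingly close to legitimate examples. Adversaries exploit this sensitivity by searching, often with the help of the model’s own gradients, for small input perturbations that push an input across a boundary and produce an incorrect output.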
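One standard way to find such perturbations is the Fast Gradient Sign Method (FGSM) of Goodfellow et al., sketched here against a logistic-regression stand-in for a detector so that the input gradient can be written by hand. The weights, the three input features, and the budget `eps` are made-up values; against real traffic, an attacker would also need the modified input to remain valid.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    """Probability that x is malicious under a logistic-regression detector."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    """Move each feature by eps in the direction that increases the loss.

    For binary cross-entropy with a logistic model, d(loss)/dx_i = (p - y) * w_i.
    """
    p = predict_proba(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]

w, b = [2.0, -1.0, 0.5], -0.2  # hypothetical trained detector
x, y = [0.3, 0.2, 0.1], 1      # a malicious sample the detector currently catches

x_adv = fgsm(w, b, x, y, eps=0.1)
print(predict_proba(w, b, x) > 0.5)      # True: flagged as malicious
print(predict_proba(w, b, x_adv) > 0.5)  # False: the perturbed sample evades detection
```

No feature moves by more than 0.1, yet the detector’s verdict flips.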

Moreover, the high-dimensional nature of the data that neural networks process, especially in deep learning models, provides adversaries with numerous avenues to introduce malicious perturbations.
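A simplified linear view, following the explanation Goodfellow et al. gave for adversarial examples, shows why dimensionality matters. Assume a score $z = \mathbf{w}^\top \mathbf{x} + b$ over $n$ features and a perturbation that changes each feature by at most $\epsilon$:

$$
\boldsymbol{\eta} = \epsilon\,\operatorname{sign}(\mathbf{w}), \qquad \|\boldsymbol{\eta}\|_\infty = \epsilon,
$$

$$
\Delta z = \mathbf{w}^\top \boldsymbol{\eta} = \epsilon \sum_{i=1}^{n} |w_i| = \epsilon\,\|\mathbf{w}\|_1 .
$$

If the average weight magnitude is $m$, then $\Delta z = \epsilon\, n\, m$: the shift in the score grows linearly with the number of features, even though no single feature moves by more than $\epsilon$.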

Defending Against Adversarial Attacks

Protecting neural networks from adversarial attacks is an active area of research. Some of the prominent defense strategies include:

- Adversarial Training: This involves training the neural network on adversarial examples, making it more robust against such attacks.

- Input Preprocessing: Techniques like image denoising or feature squeezing can be used to remove adversarial perturbations from the input data.

- Model Ensembling: Using multiple models and aggregating their predictions can increase the difficulty for adversaries to craft successful attacks.
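Adversarial training, the first defense above, can be sketched on a toy logistic-regression detector: in every epoch, Fast Gradient Sign Method (FGSM) examples are regenerated against the current weights, and the model is trained on clean and adversarial copies together. The model, learning rate, `eps`, and one-feature dataset are illustrative assumptions; with deep networks the same loop would run inside a framework that computes input gradients automatically.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def fgsm(w, b, x, y, eps):
    """FGSM for logistic regression: d(loss)/dx_i = (p - y) * w_i."""
    p = predict_proba(w, b, x)
    step = lambda g: eps * ((g > 0) - (g < 0))
    return [xi + step((p - y) * wi) for xi, wi in zip(x, w)]

def adversarial_train(data, eps, lr=0.5, epochs=200):
    """Full-batch gradient descent on clean examples plus FGSM copies of them."""
    n = len(data[0][0])
    w, b = [0.0] * n, 0.0
    for _ in range(epochs):
        # Regenerate adversarial examples against the current model each epoch.
        batch = data + [(fgsm(w, b, x, y, eps), y) for x, y in data]
        grad_w, grad_b = [0.0] * n, 0.0
        for x, y in batch:
            err = predict_proba(w, b, x) - y  # d(cross-entropy)/d(logit)
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / len(batch) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(batch)
    return w, b

# Hypothetical one-feature dataset: negative values benign (0), positive malicious (1).
data = [([-2.0], 0), ([-1.5], 0), ([-1.0], 0), ([1.0], 1), ([1.5], 1), ([2.0], 1)]
w, b = adversarial_train(data, eps=0.3)

robust_acc = sum(
    (predict_proba(w, b, fgsm(w, b, x, y, 0.3)) > 0.5) == (y == 1) for x, y in data
) / len(data)
print(robust_acc)  # 1.0 on this separable toy data
```

On separable toy data like this, plain training would also succeed; the sketch shows the mechanics of the loop, not a measured robustness gain.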

The Ongoing Battle

While defense mechanisms are continually evolving, so are adversarial attack techniques. It’s an ongoing cat-and-mouse game between defenders and attackers. As neural networks become more integral to cybersecurity, ensuring their robustness against adversarial threats becomes paramount.

Ethical and Societal Implications of Neural Networks in Cybersecurity

The Trust Paradox

As neural networks become more integrated into cybersecurity solutions, a paradox emerges: while these models offer enhanced protection and predictive capabilities, their inherent complexity can lead to mistrust. The “black box” nature of deep learning models, where the decision-making process is not transparent, poses challenges. In a domain where understanding the rationale behind decisions can be crucial, especially in the aftermath of a security incident, this lack of transparency can be problematic.

Privacy Concerns in a Networked World

Neural networks, especially in intrusion detection systems, often require access to vast amounts of data to function effectively. This data can include network traffic, user behaviors, and in some cases even the content of communications. The collection and processing of such data raise significant privacy concerns. Balancing the need for comprehensive data analysis for security purposes with the rights of individuals to privacy is a delicate act that organizations must navigate.

Bias and Fairness

Like all machine learning models, neural networks are only as good as the data they’re trained on. If this data contains biases, the model will likely inherit and perpetuate them. In the context of cybersecurity, biased models could lead to unfair targeting or misclassification of certain types of network traffic or user behaviors. Ensuring fairness and reducing biases in neural network models is essential to maintain trust and ensure equitable treatment.

Accountability and Liability

With neural networks making critical decisions in real-time, questions of accountability arise. If a neural network fails to detect a cyber threat, leading to a breach, who is held accountable? Is it the developers of the model, the organization deploying it, or the technology itself? Establishing clear frameworks for accountability in AI-driven cybersecurity solutions is crucial.

The Future of Neural Networks in Cybersecurity

Emerging Neural Network Architectures

As research in the field of neural networks continues to advance, new architectures and paradigms are emerging. These include Generative Adversarial Networks (GANs), which can generate synthetic data, and Transformer-based models, known for their prowess in natural language processing. The application of these advanced architectures in cybersecurity can open up new avenues for threat detection, data synthesis for training, and even simulating cyber-attack scenarios to test defenses.

Quantum Computing and Neural Networks

The advent of quantum computing may eventually transform various fields, including artificial intelligence. Quantum neural networks, which leverage the principles of quantum mechanics, remain largely experimental, and whether they will offer practical speedups over classical hardware is still an open research question. If they do, the benefits for cybersecurity could include faster analysis of massive datasets and quicker threat detection and response.

Federated Learning: A New Paradigm

With growing concerns about data privacy, federated learning offers a potential solution. Instead of centralizing data for training neural networks, federated learning allows for model training across multiple devices or nodes, keeping data localized. Because raw data never has to be pooled in one place, this approach reduces privacy risk and removes a single, attractive target for data breaches, although the shared model updates can still leak some information about the underlying data.
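The server-side step of Federated Averaging (FedAvg, McMahan et al.), a widely used federated learning algorithm, can be sketched as a weighted average of the parameters each client sends back, weighted by how many local examples produced them. `toy_local_update` is a hypothetical stand-in for a client’s on-device training, and parameters are flat lists of floats for simplicity.

```python
from typing import Callable, List, Sequence

Weights = List[float]

def fed_avg(client_weights: Sequence[Weights], client_sizes: Sequence[int]) -> Weights:
    """Average client parameter vectors, weighting each by its number of training examples."""
    total = sum(client_sizes)
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(len(client_weights[0]))
    ]

def federated_round(global_weights: Weights,
                    client_datasets: Sequence[Sequence[float]],
                    local_update: Callable[[Weights, Sequence[float]], Weights]) -> Weights:
    """One round: each client trains locally; only the resulting weights leave the client."""
    updates = [local_update(list(global_weights), data) for data in client_datasets]
    return fed_avg(updates, [len(data) for data in client_datasets])

# Hypothetical local step: each client "trains" a one-parameter model to its data mean.
def toy_local_update(weights, data):
    return [sum(data) / len(data)]

clients = [[1.0, 1.0], [4.0, 4.0, 4.0, 4.0]]  # raw data stays on each client
print(federated_round([0.0], clients, toy_local_update))  # [3.0]
```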

Neural Networks and Human Collaboration

While neural networks offer automation and efficiency, the human element in cybersecurity remains irreplaceable. The future will likely see a more collaborative approach, where neural networks work alongside human experts. Such a partnership can combine the predictive power of neural networks with the intuitive and creative problem-solving abilities of humans.

Conclusion

The digital realm of cybersecurity is ever-evolving, with both challenges and innovations emerging at a rapid pace. Neural networks have ushered in a new era of advanced threat detection and proactive defense. However, they also present new vulnerabilities, exemplified by adversarial attacks.

Despite the technological leaps, the human aspect remains irreplaceable. The true strength of cybersecurity lies in the synergy between advanced algorithms and human intuition. As we move forward, the integration of neural networks in cybersecurity will deepen, but it’s crucial to harness them responsibly.

In essence, the relationship between neural networks and cybersecurity is a delicate balance of innovation and responsibility. The journey ahead promises advancements, but with them comes the imperative of ethical and effective application. The goal remains clear: a safer, more secure digital landscape for all.