In today’s fast-paced digital age, the quest for convenience and efficiency has led to the rise of virtual assistants, commonly referred to as “smart assistants.” These AI-driven entities have seamlessly integrated into our daily lives, offering hands-free solutions to a myriad of tasks, from setting reminders to controlling smart home devices. But what exactly are these smart assistants, and how have they become such integral parts of our digital ecosystem?

What is a Smart Assistant?

A smart assistant, at its core, is a software agent designed to perform tasks or services for an individual based on commands or queries. These commands can be text-based, but with the advent of advanced voice recognition technologies, verbal interactions have become the norm. Think of smart assistants as your personal digital butler, always ready to assist, whether it’s playing your favorite song, updating your calendar, or even ordering groceries online.

The Pioneers of Voice Assistance

The concept of voice-activated assistance isn’t new. In fact, the journey began as early as the 1920s with toys like “Radio Rex,” a wooden dog that would respond when its name was called. Fast forward to the 1960s, and we saw IBM introduce the “Shoebox,” a primitive computer that could recognize spoken digits. However, the real game-changer arrived in the 2010s with the introduction of sophisticated virtual assistants like Apple’s Siri, Amazon’s Alexa, and Google Assistant.

| Smart Assistant | Developer | Initial Release |
|---|---|---|
| Siri | Apple Inc. | 2011 |
| Alexa | Amazon | 2014 |
| Google Assistant | Google | 2016 |

The Rise in Popularity

The success of smart assistants can be attributed to their ability to mimic human conversation. By leveraging technologies such as Natural Language Processing (NLP) and machine learning, these assistants can understand, learn from, and respond to user commands in a conversational manner. Their integration into smartphones, smart speakers, and even household appliances has further propelled their adoption. Some industry estimates put the number of frequent users of digital voice assistants at around 1 billion worldwide.

The Evolution of Voice-Activated Devices

The journey of voice-activated devices is a testament to human ingenuity and the relentless pursuit of technological advancement. From rudimentary voice recognition systems to today’s sophisticated AI-driven assistants, the evolution has been nothing short of revolutionary.

From Humble Beginnings to Digital Domination

The initial foray into voice recognition was marked by simple devices with limited capabilities. As mentioned earlier, toys like “Radio Rex” and IBM’s “Shoebox” were the precursors. However, these devices were constrained by their limited vocabulary and the need for precise pronunciation.

The 1980s and 1990s saw significant strides in voice recognition technology. Systems like Dragon Dictate were introduced, allowing users to convert spoken words into text, albeit with a hefty price tag and the need for user training. But it was the dawn of the 21st century that truly marked the era of voice-activated digital assistants.

The Modern Titans of Voice Technology

With the introduction of Apple’s Siri in 2011, the landscape of voice technology underwent a seismic shift. Siri’s ability to understand natural language and provide context-aware responses set the benchmark for future voice assistants. Soon, Amazon’s Alexa and Google Assistant entered the fray, each bringing its unique features and capabilities.

| Year | Milestone |
|---|---|
| 2011 | Apple introduces Siri on the iPhone 4S. |
| 2014 | Amazon launches Echo with Alexa. |
| 2016 | Google unveils Google Assistant. |
| 2018 | Integration of voice assistants in smart TVs. |
| 2020 | Voice assistants become standard in many vehicles. |

The Power of Integration

The true potential of voice-activated devices was realized when they began integrating with other technologies. Smart homes equipped with voice-controlled lighting, thermostats, and security systems became a reality. The convenience of asking a voice assistant to play music, set reminders, or even make a reservation at a restaurant was unparalleled.

Moreover, the integration extended beyond homes. Cars equipped with voice assistants allowed drivers to navigate, make calls, and control music without taking their hands off the wheel. Wearable devices, too, began leveraging voice technology, enabling users to access information and perform tasks on the go.

Challenges and Criticisms

While the evolution of voice-activated devices has brought immense convenience, it hasn’t been without its challenges. Concerns regarding data privacy, eavesdropping, and the potential misuse of recorded conversations have been at the forefront. As these devices become more ingrained in our daily lives, addressing these concerns becomes paramount.

Capabilities and Interactions

Voice-activated devices, with their seemingly magical ability to understand and respond to our commands, have become a staple in many households and businesses. But how do these devices process our requests, and what technologies underpin their capabilities?

Understanding the Spoken Word

At the heart of any voice-activated device is its ability to recognize and interpret human speech. This process, known as Automatic Speech Recognition (ASR), involves converting the spoken word into text. ASR systems are trained on vast datasets encompassing various accents, dialects, and languages, which helps them handle a broad range of speakers.

Natural Language Processing: Making Sense of Speech

Once the spoken word is converted into text, the next challenge is to derive meaning from it. Enter Natural Language Processing (NLP). NLP is a branch of artificial intelligence that focuses on the interaction between computers and humans through natural language. It enables machines to understand, interpret, and generate human language in a way that is both meaningful and contextually relevant.

For instance, when you ask your smart assistant, “Will it rain tomorrow?”, NLP algorithms parse the sentence structure, identify the main subject (rain) and the time reference (tomorrow), and then provide a relevant response based on available weather data.
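
To make the parsing step concrete, here is a deliberately simple Python sketch. It assumes a hand-written keyword table in place of the statistical language models real assistants use, and the names `parse`, `Intent`, `CONDITIONS`, and `TIME_WORDS` are hypothetical. It only shows the idea of turning a sentence into an intent plus the details that go with it.

```python
import re
from dataclasses import dataclass
from typing import Optional

@dataclass
class Intent:
    name: str                 # what the user wants, e.g. "weather_forecast"
    condition: Optional[str]  # the weather condition asked about, if any
    when: Optional[str]       # the time reference, if any

# Hypothetical keyword tables standing in for a trained language model.
CONDITIONS = ("rain", "snow", "sun", "wind")
TIME_WORDS = ("today", "tonight", "tomorrow", "this weekend")

def parse(utterance: str) -> Intent:
    text = utterance.lower()
    # \b word boundaries stop "sun" from matching inside "sunday".
    condition = next((c for c in CONDITIONS if re.search(rf"\b{c}\b", text)), None)
    when = next((t for t in TIME_WORDS if t in text), None)
    name = "weather_forecast" if condition else "unknown"
    return Intent(name=name, condition=condition, when=when)
```

For "Will it rain tomorrow?" this yields a `weather_forecast` intent with condition `rain` and time `tomorrow`. A real assistant would then look those values up against a weather service; that lookup is omitted here.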

Machine Learning: The Learning Curve

One of the standout features of modern smart assistants is their ability to learn from user interactions. Machine learning, a subset of AI, allows these devices to adapt and improve over time. By analyzing patterns in user behavior and preferences, smart assistants can offer more personalized and accurate responses.

For example, if you frequently ask your assistant to play jazz music in the evenings, over time, it might proactively suggest jazz playlists or inform you about upcoming jazz concerts in your area.
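
The jazz example can be illustrated with a simple frequency count. This is a minimal sketch, assuming a plain counting model rather than the machine-learning systems assistants actually use; `PreferenceModel`, `part_of_day`, and the `min_count` threshold are hypothetical names chosen for illustration.

```python
from collections import Counter, defaultdict
from typing import DefaultDict, Optional

def part_of_day(hour: int) -> str:
    """Bucket an hour (0-23) into a coarse period of the day."""
    if 5 <= hour < 12:
        return "morning"
    if 12 <= hour < 18:
        return "afternoon"
    return "evening"  # 18:00-04:59, covering evening and night

class PreferenceModel:
    """Counts which genres a user asks for in each part of the day."""

    def __init__(self, min_count: int = 3) -> None:
        self.counts: DefaultDict[str, Counter] = defaultdict(Counter)
        self.min_count = min_count  # no suggestion until a habit is established

    def record(self, hour: int, genre: str) -> None:
        self.counts[part_of_day(hour)][genre] += 1

    def suggest(self, hour: int) -> Optional[str]:
        top = self.counts[part_of_day(hour)].most_common(1)
        if top and top[0][1] >= self.min_count:
            return top[0][0]
        return None
```

The `min_count` threshold reflects the "over time" in the example: a single evening request is not treated as a habit.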

The Multimodal Experience

While voice remains the primary mode of interaction, many smart assistants now offer multimodal experiences, combining voice with visual or tactile feedback. Devices with screens, like the Amazon Echo Show or Google Nest Hub, can display relevant visuals, enhancing user interactions. Asking for a recipe might yield not only a spoken response but also a step-by-step video tutorial.
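
One way to picture a multimodal reply is a response object whose spoken and visual parts depend on the device. The sketch below assumes hypothetical `Device` and `Response` types and a `build_recipe_response` helper; it does not reflect the actual Echo Show or Nest Hub APIs.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Device:
    name: str
    has_screen: bool

@dataclass
class Response:
    speech: str                                # always spoken aloud
    display_steps: Optional[List[str]] = None  # shown only on devices with a screen

def build_recipe_response(device: Device, title: str, steps: List[str]) -> Response:
    if not steps:
        return Response(speech=f"Sorry, I couldn't find steps for {title}.")
    if device.has_screen:
        # Screen devices: a short spoken summary, with the full steps on the display.
        return Response(
            speech=f"Here's a recipe for {title}. The steps are on your screen.",
            display_steps=steps,
        )
    # Voice-only devices: read the first step aloud; later steps would follow on request.
    return Response(speech=f"Here's a recipe for {title}. Step one: {steps[0]}")
```

The same request therefore produces one spoken answer everywhere, with the visual part added only where a screen exists.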

Privacy and Data Handling

As impressive as these capabilities are, they come with inherent challenges, especially concerning user data. Every command given to a smart assistant is processed, often in remote data centers. This raises questions about data storage, encryption, and potential misuse. Leading companies have made strides in addressing these concerns, with features like local voice processing and the ability for users to review and delete their voice history.

The Double-Edged Sword: Convenience vs. Privacy

In the digital age, the trade-off between convenience and privacy has become a central debate. Voice-activated devices, with their always-listening capabilities, sit squarely at the intersection of this discussion. While they offer unparalleled ease and efficiency, they also raise significant concerns about user privacy and data security.

Always Listening: A Necessary Evil?

For a voice-activated device to respond promptly to a user’s command, it needs to be in an “always listening” mode. This means that the device is constantly processing ambient sound, typically on the device itself, while waiting for its wake word (e.g., “Hey Siri” or “Alexa”). Once the wake word is detected, the device starts recording and processing the subsequent command.
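
The wake-word loop can be sketched as follows. To keep it runnable, the sketch assumes each incoming "frame" is a single already-recognized word rather than raw audio, and that a command lasts a fixed number of frames; real devices run a small acoustic model on audio and typically detect the end of a command from silence. `capture_commands` is a hypothetical name.

```python
from typing import Iterable, List

def capture_commands(frames: Iterable[str], wake_word: str = "alexa",
                     command_len: int = 4) -> List[List[str]]:
    """Keep only the frames that follow a wake word; drop everything else.

    Assumption: each frame is one already-recognized word standing in for
    a short chunk of audio, and a command is a fixed number of frames.
    """
    commands: List[List[str]] = []
    current: List[str] = []
    remaining = 0  # frames still to record for the active command
    for frame in frames:
        if remaining > 0:
            current.append(frame)  # recording: this frame goes on to processing
            remaining -= 1
            if remaining == 0:
                commands.append(current)
                current = []
        elif frame.lower() == wake_word:
            remaining = command_len  # wake word heard: start recording
        # Otherwise the frame is ambient sound: checked locally, then discarded.
    if current:
        commands.append(current)  # the stream ended partway through a command
    return commands
```

The point of the structure is that ambient frames are examined and dropped immediately; only frames after a detection are kept for further processing.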

While this feature is essential for the device’s functionality, it has led to concerns about inadvertent recordings and potential eavesdropping. Reports of private conversations being recorded and sent to a user’s contacts, or of dialogue snippets being saved without the speakers realizing it, have made headlines and caused unease among users.

How Voice Data is Processed and Stored

When a command is given to a smart assistant, the voice snippet is often sent to remote servers for processing. These servers analyze the command, determine the appropriate response, and send it back to the device. The voice data, in many cases, is stored temporarily to improve the system’s accuracy and responsiveness.

Major tech companies assert that this data is anonymized and stripped of personally identifiable information. However, the very act of storing voice recordings, even temporarily, raises red flags for privacy advocates.

Potential Misuse of Voice Data

The storage of voice data presents a tempting target for cybercriminals. A breach could potentially expose sensitive personal information. Moreover, there’s the concern of companies using this data for targeted advertising. While many companies deny using voice recordings for ads, the mere possibility has led to calls for stricter regulations and transparency.

User Control and Transparency

In response to these concerns, leading tech companies have introduced measures to give users more control over their voice data. Features like the ability to review, listen to, and delete voice recordings are now standard. Some companies have gone a step further, introducing local voice processing so that certain voice commands are analyzed on the device itself, reducing the need to send data to external servers.
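
The split between local and remote processing can be sketched as a simple router. This assumes a hypothetical device with a fixed table of commands it can handle offline (`LOCAL_COMMANDS`) and a `send_to_cloud` callback standing in for the remote service; real assistants match intents with trained models rather than exact strings.

```python
from typing import Callable, Dict

# Hypothetical commands this imaginary device can handle without a network.
LOCAL_COMMANDS: Dict[str, str] = {
    "turn on the lights": "Lights on.",
    "turn off the lights": "Lights off.",
    "stop": "Stopped.",
    "volume up": "Volume up.",
}

def handle(command: str, send_to_cloud: Callable[[str], str]) -> str:
    """Answer simple commands on the device; forward everything else."""
    key = command.strip().lower().rstrip(".!?")
    if key in LOCAL_COMMANDS:
        return LOCAL_COMMANDS[key]  # handled locally: nothing leaves the device
    return send_to_cloud(command)   # needs remote servers (e.g. general questions)
```

The privacy benefit comes from the first branch: the more commands a device can resolve locally, the fewer requests ever reach external servers.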

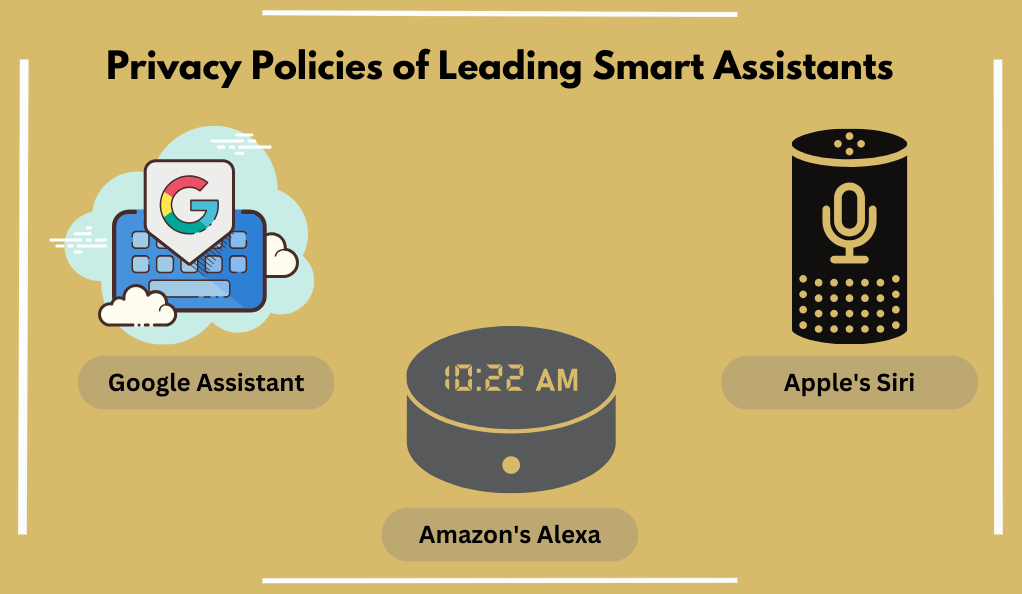

Privacy Policies of Leading Smart Assistants

As voice-activated devices become more ubiquitous, understanding the privacy policies of the major players in the industry is crucial. Each company approaches user data and privacy differently, and being informed can help users make better decisions about which devices to use and how to use them.

Google Assistant

Google’s Approach: Google emphasizes transparency and control when it comes to user data. They have made efforts to explain how data is used to improve services and provide personalized experiences.

Data Handling: Voice queries made to Google Assistant are sent to Google servers for processing. However, Google has introduced features like on-device processing for some tasks, reducing the need to send data externally.

User Control: Users can access their Google account to review and delete voice activity. Google also offers an auto-delete option, where users can choose to have their data automatically deleted after 3, 18, or 36 months.
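
An auto-delete setting boils down to a retention cutoff. The sketch below is not Google’s implementation; it assumes a hypothetical list of `Recording` entries and an `apply_auto_delete` helper, and approximates a month as 30 days.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List

@dataclass
class Recording:
    recorded_at: datetime
    transcript: str

ALLOWED_PERIODS = {3, 18, 36}  # months: the choices described above

def apply_auto_delete(history: List[Recording], months: int, now: datetime) -> List[Recording]:
    """Return only the recordings newer than the chosen retention period."""
    if months not in ALLOWED_PERIODS:
        raise ValueError(f"unsupported retention period: {months}")
    cutoff = now - timedelta(days=30 * months)  # assumption: 1 month = 30 days
    return [r for r in history if r.recorded_at >= cutoff]
```

A shorter period keeps less history around, which also means less data exposed if an account or server is ever compromised.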

Amazon’s Alexa

Amazon’s Approach: Amazon’s primary focus is on user trust. They aim to provide clarity on how Alexa uses voice recordings and offer users control over their data.

Data Handling: Voice recordings are stored in the cloud to improve Alexa’s understanding and responsiveness. However, Amazon has been working on local voice processing for specific tasks.

User Control: Amazon provides a clear dashboard where users can review, listen to, and delete voice recordings. They’ve also introduced commands like, “Alexa, delete what I just said,” giving users real-time control over their data.

Apple’s Siri

Apple’s Approach: Apple has always positioned itself as a champion of user privacy. With Siri, they emphasize on-device processing and minimal data collection.

Data Handling: Much of Siri’s processing happens directly on the device, reducing the need to send data to Apple servers. When data is sent, Apple states that it is anonymized and not linked to the user’s Apple ID.

User Control: Apple offers a comprehensive settings menu where users can control how Siri uses their data. They’ve also introduced an opt-in program where users can choose to share voice recordings to improve Siri, ensuring user consent.

The Ethical Dilemma: Microwork and Data Labeling

Behind the seamless interactions and accurate responses of voice-activated devices lies a lesser-known aspect of AI development: microwork and data labeling. This process, essential for training AI models, involves human workers reviewing and annotating vast amounts of data. While it’s a cornerstone of AI’s success, it also raises several ethical concerns.

Microwork: The Human Touch in AI

Microwork refers to small, task-based jobs that individuals complete for AI companies. In the realm of voice-activated devices, this often involves listening to short voice clips and transcribing or labeling them. These annotated datasets are then used to train and refine AI models, ensuring they understand and respond accurately to user commands.

The Value of Data Labeling

Data labeling is crucial for the success of any AI system. For voice assistants, this means understanding different accents, dialects, languages, and nuances in human speech. By having real humans review and annotate voice data, companies can ensure their AI models are robust and versatile.

Ethical Concerns

While microwork and data labeling are essential, they come with a set of ethical challenges:

- Privacy: Even if voice clips are anonymized, there’s potential for workers to hear sensitive or personal information. This raises concerns about user privacy and data protection.

- Working Conditions: Many microworkers operate in a gig economy setup, often without the benefits and protections of traditional employment. There are concerns about fair pay, job security, and the potential for exploitation.

- Transparency: Users are often unaware that their voice commands might be reviewed by humans. While companies argue this is essential for improving services, the lack of transparency can be unsettling for many.

Steps Towards Ethical Microwork

Recognizing these concerns, some companies have taken steps to ensure ethical practices in microwork:

- Clear Disclosures: Informing users that their data might be reviewed by humans and giving them the option to opt-out.

- Better Anonymization: Ensuring that voice clips sent for review don’t contain personally identifiable information.

- Fair Treatment of Workers: Ensuring microworkers are compensated fairly and have decent working conditions.
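
The first two steps, honoring opt-outs and stripping identifying details before a clip reaches a reviewer, might look like the following sketch. It works on transcripts rather than audio, and the two regular expressions are illustrative assumptions: reliable detection of personal information needs far more than pattern matching. `Clip` and `prepare_for_review` are hypothetical names.

```python
import re
import uuid
from dataclasses import dataclass
from typing import List

@dataclass
class Clip:
    user_id: str
    transcript: str
    opted_in: bool  # did the user agree to human review?

# Illustrative patterns only; real PII detection needs much more than this.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")

def prepare_for_review(clips: List[Clip]) -> List[dict]:
    """Drop opted-out clips, redact obvious identifiers, and detach the account."""
    batch = []
    for clip in clips:
        if not clip.opted_in:
            continue  # respect the opt-out: never sent to reviewers
        text = EMAIL.sub("[EMAIL]", clip.transcript)
        text = PHONE.sub("[PHONE]", text)
        batch.append({
            "clip_id": uuid.uuid4().hex,  # random ID, not linked to user_id
            "transcript": text,
        })
    return batch
```

Note that the reviewer-facing record never carries the `user_id`, so a worker who hears a clip has no direct way to tie it back to an account.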

Securing Your Smart Assistant

As voice-activated devices become more integrated into our daily routines, ensuring their security is paramount. From safeguarding personal data to preventing unauthorized access, users need to be proactive in protecting themselves and their devices. Here’s a comprehensive guide to bolstering the security of your smart assistant.

Understanding the Risks

Before diving into security measures, it’s essential to grasp the potential risks associated with voice-activated devices:

- Unauthorized Access: Without proper security, someone could potentially access your device and issue commands, from playing music to unlocking smart doors.

- Eavesdropping: There’s the potential for malicious entities to tap into your device’s microphone, listening in on private conversations.

- Data Breaches: Your voice commands, when processed and stored, could be exposed in the event of a data breach.

Best Practices for Enhanced Security

- Strong Passwords: Ensure that any accounts associated with your voice-activated device have strong, unique passwords. Regularly updating these passwords can also bolster security.

- Enable Two-Factor Authentication: Where available, enable two-factor authentication for added account security. This often involves receiving a code on your phone that you’ll need to input along with your password.

- Regular Software Updates: Manufacturers frequently release software updates that address security vulnerabilities. Ensure your device’s firmware and associated apps are always up to date.

- Mute When Not in Use: Most smart assistants come with a mute button that disables the microphone. If you’re discussing sensitive information or simply want added privacy, use this feature.

- Review and Delete Voice Recordings: Periodically review and clear out your voice command history. This protects your privacy and limits what a malicious actor could obtain if your account were ever compromised.

- Limit Integrations: Be judicious about which third-party apps and services you link to your voice assistant. Each integration is a potential vulnerability.

- Voice Recognition: Some devices can recognize individual users’ voices and restrict certain actions, such as purchases or access to personal information, to recognized voices. Activate this feature if available.

- Secure Your Network: Ensure that your home Wi-Fi network, which your device is likely connected to, is secure. Use strong encryption, regularly update your router’s firmware, and change default login credentials.
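
Many two-factor setups deliver codes by SMS, but authenticator apps commonly generate them with the time-based one-time password (TOTP) algorithm from RFC 6238, which builds on HOTP from RFC 4226. The sketch below shows how such a code is derived from a shared secret and the current time. It is illustrative only: a production service should rely on a vetted library and typically accepts a small window of adjacent time steps to tolerate clock drift.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional

def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226)."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low 4 bits pick a 4-byte window
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)

def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over 30-second time steps."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)

def verify(secret: bytes, submitted: str, at: Optional[float] = None) -> bool:
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(totp(secret, at), submitted)
```

Because the code depends on both the secret and the current 30-second window, a stolen password alone is not enough to sign in.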

Conclusion

Voice-activated devices have revolutionized our digital interactions, offering unparalleled convenience. However, with this innovation comes challenges, especially concerning privacy and security. As we stand on the brink of a more integrated voice-activated era, it’s crucial to balance technological advancements with ethical considerations. The future promises devices that are more intuitive and anticipatory, but it’s our collective responsibility to ensure they’re used safely and transparently. In essence, as we converse more with our digital assistants, building trust and understanding will be paramount.